Hi everyone, we are up and running and welcome to our very exciting webinar called Future of Risk Management, Powered by AI. I’m Alex Sidorenko, and today I’m thrilled to be joined by Alex Glebov and Brian McGough. Together, we’re going to explore some truly groundbreaking opportunities in the risk management space, all driven by advancements in AI.

When I first met Alex and Brian, we began exchanging ideas about automating processes within our organizations. The innovations they’ve implemented and the projects they are currently working on blew my mind. I knew immediately that we needed to share these ideas with the broader risk awareness audience. So, thank you for joining us in this live, interactive webinar. Read this summary or watch the replay on the Risk Awareness Week website, YouTube, or LinkedIn.

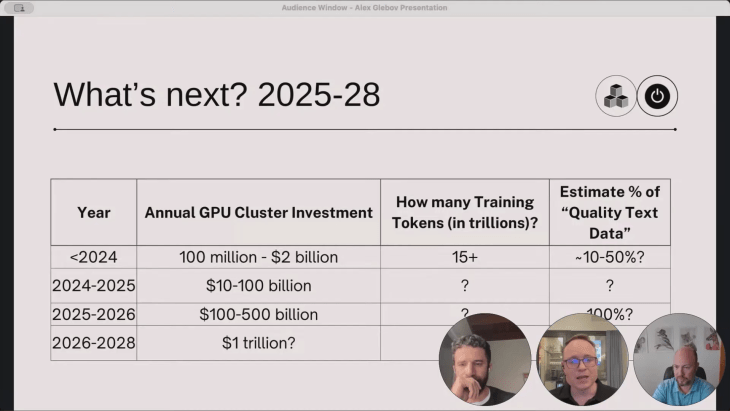

Alex Glebov is the CTO at an AI-powered company, and he’s here to deliver some fascinating insights on where AI stands today. From exploring large language models (LLMs) to understanding their limitations and adoption strategies, Alex will guide us through a technical yet accessible journey.

Understanding AI and LLMs

What are Large Language Models?

Large language models, or LLMs, like ChatGPT fall under the category of generative AI. These models are trained on massive datasets and are designed to produce text outputs that are highly relevant and contextually appropriate based on user queries.

“Imagine if you spent every day of your life reading at 250 words per minute for eight hours a day without interruption for 80 years. You would only read 0.03% of the LLM training data of Llama 3.” - Alex Glebov
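The figure in the quote can be checked with a few lines of arithmetic. The sketch below is a rough check, not the speaker's own calculation. It assumes a training set of roughly 15 trillion tokens, which is the number Meta has reported for Llama 3, and a rule of thumb of about 0.75 English words per token. Neither number comes from the webinar.

```python
# Rough check of the reading-time comparison in the quote.
WORDS_PER_MINUTE = 250
HOURS_PER_DAY = 8
YEARS = 80

# Assumptions (not from the webinar): Meta reports roughly 15 trillion
# training tokens for Llama 3, and ~0.75 English words per token is a
# common rule of thumb.
LLAMA3_TRAINING_TOKENS = 15e12
WORDS_PER_TOKEN = 0.75

words_read = WORDS_PER_MINUTE * 60 * HOURS_PER_DAY * 365 * YEARS
tokens_read = words_read / WORDS_PER_TOKEN
share_percent = 100 * tokens_read / LLAMA3_TRAINING_TOKENS

print(f"Words read in a lifetime: {words_read:,.0f}")
print(f"Share of training data:   {share_percent:.2f}%")
```

Under these assumptions a lifetime of reading comes to about 3.5 billion words, which is in line with the 0.03% in the quote.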

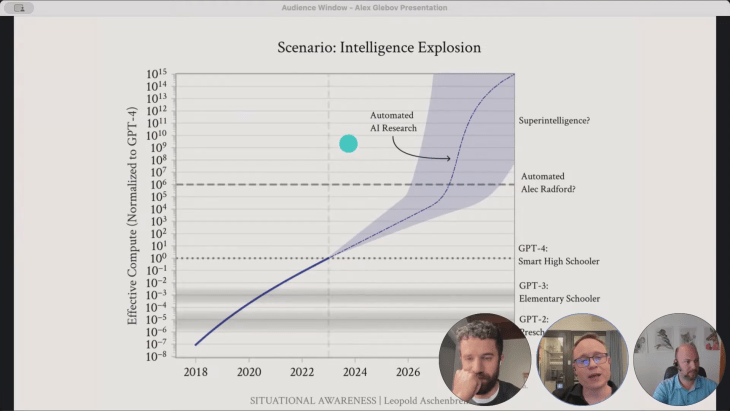

A brief history and evolution

AI and machine learning have been around for decades, but one of the most significant breakthroughs came in 2017 with the paper “Attention Is All You Need,” which introduced the transformer architecture. Transformers dramatically improved the efficiency and performance of language models, reducing costs and making them more accessible.

In November 2022, OpenAI released ChatGPT, which saw unprecedented user adoption—reaching 100 million users in just two months. This rapid growth highlighted the enormous potential and public interest in generative AI technologies.

Current landscape and usage of AI

Adoption and reach

ChatGPT set the stage for what is possible with LLMs, achieving over 600 million visits per month. It’s not just popular in the United States; there’s significant adoption in countries like India, Brazil, Kenya, and Mexico.

Real-world applications

Users around the world are leveraging ChatGPT and similar models for various applications, from coding assistance to drafting legal documents. For those of us in the risk management space, the possibilities are endless.

Here’s a quick poll for you: Have you used ChatGPT in your work? What are some of your use cases? Go to the replay page to see how participants voted.

Exploring the limitations of LLMs

While the capabilities of LLMs are impressive, they are not without limitations:

- Input token limit: Currently around 128,000 tokens for ChatGPT, which means you can’t input an entire textbook at once.

- Response token limit: Typically up to 4,000 tokens, limiting the length of the response you can generate in a single query.

- Hallucinations: AI models can generate inaccurate information. Several tools are trying to mitigate this by adding double-checking features.

- Inconsistency: Responses are sampled, and the temperature parameter controls how much randomness is involved, so the same prompt can produce different answers. This makes consistent results tricky to get.

- No true reasoning: While LLMs can generate human-like text, they don’t possess true reasoning capabilities.

- Training cutoff: Models are only as current as their latest training data, which may not include the most recent information.

- Cost: Depending on scale, token costs can add up, although many models offer significant value for money.
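The token limits and cost points above can be made concrete with a small budgeting sketch. It uses the two limits quoted in the list. Everything else is an assumption for illustration: the 4-characters-per-token estimate is only a rule of thumb for English text, the prices are hypothetical, and `estimate_tokens`, `split_to_fit`, and `estimate_cost` are made-up helper names. For exact counts, use the tokenizer and price list of the model provider.

```python
import math

# Limits quoted in the list above; real limits depend on the model.
INPUT_TOKEN_LIMIT = 128_000
RESPONSE_TOKEN_LIMIT = 4_000

# Assumption: ~4 characters per token, a rough rule of thumb for English.
CHARS_PER_TOKEN = 4

# Hypothetical prices in USD per 1,000 tokens, for illustration only.
PRICE_PER_1K_INPUT = 0.01
PRICE_PER_1K_OUTPUT = 0.03


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def split_to_fit(text: str, limit: int = INPUT_TOKEN_LIMIT) -> list[str]:
    """Split text into consecutive pieces that each fit the estimated limit."""
    max_chars = limit * CHARS_PER_TOKEN
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


def estimate_cost(input_tokens: int, output_tokens: int = RESPONSE_TOKEN_LIMIT) -> float:
    """Estimated cost of one request at the hypothetical prices above."""
    return (input_tokens / 1000) * PRICE_PER_1K_INPUT + (output_tokens / 1000) * PRICE_PER_1K_OUTPUT


textbook = "risk " * 200_000  # stand-in for a long document (1,000,000 characters)
pieces = split_to_fit(textbook)
print(f"Estimated tokens: {estimate_tokens(textbook):,}")
print(f"Requests needed:  {len(pieces)}")
for piece in pieces:
    tokens = estimate_tokens(piece)
    print(f"  ~{tokens:,} tokens, ~${estimate_cost(tokens):.2f}")
```

Splitting on raw character positions is the simplest option and can cut a sentence in half. A real pipeline would more likely split on paragraph or section boundaries.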

Feel free to add any limitations you’ve encountered in the chat. It’s good to share and learn from each other.

The new wave of LLMs

There has been a surge of new LLMs since OpenAI released its models. Here are some notable ones based on my daily work:

- Claude 3.5 by Anthropic: Excellent for coding and client work.

- GPT-4 by OpenAI: Still a top performer.

- Llama 3.1 by Meta: An exciting open-source model with great potential.

- Gemini 2 by Google: Another strong contender.

August 2024 marks a unique moment: closed-source and open-source models are showing comparable performance.

Practical AI adoption strategies

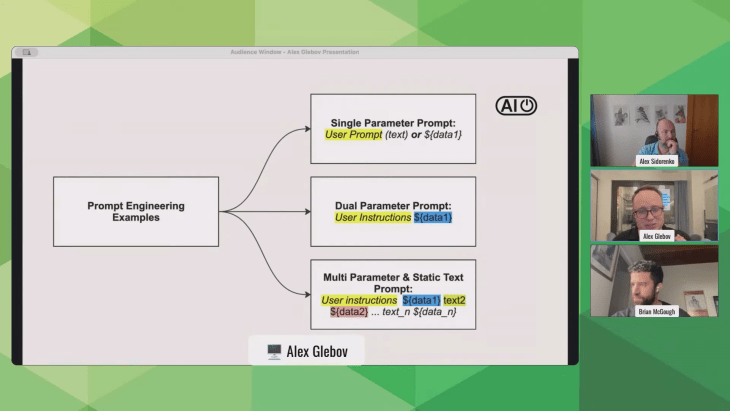

Here’s where we get to the nitty-gritty of integrating AI into your workflow. Understanding and adopting AI involves several concepts:

API integration

APIs allow you to interact with models like ChatGPT programmatically. Here’s a simple example of a request body for a chat completion call:

```json
{
  "model": "gpt-4-turbo",
  "messages": [
    {"role": "user", "content": "Generate a risk analysis for a new project in the finance sector."}
  ],
  "max_tokens": 1000
}
```

Prompt engineering

Effective prompts can drastically improve the quality of AI responses. Experiment with different structures to find what works best.
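One way to experiment with structure is to build prompts from labeled sections instead of a single sentence. The sketch below is only one possible layout: the section names (role, context, task, output format), the `build_prompt` helper, and the lending scenario are all assumptions made for illustration, not a structure recommended in the webinar.

```python
def build_prompt(role: str, context: str, task: str, output_format: str) -> str:
    """Assemble a structured prompt from labeled sections."""
    sections = [
        ("Role", role),
        ("Context", context),
        ("Task", task),
        ("Output format", output_format),
    ]
    return "\n\n".join(f"{label}:\n{text.strip()}" for label, text in sections)


# Unstructured version, for comparison.
vague_prompt = "Generate a risk analysis for a new project in the finance sector."

# Hypothetical scenario used only to fill in the template.
structured_prompt = build_prompt(
    role="You are a risk analyst supporting an investment decision.",
    context="A mid-sized bank plans to launch a new consumer lending product.",
    task="List the five most significant risks to the project's objectives.",
    output_format="A numbered list. For each risk give a cause, a consequence, and one key assumption.",
)

print(structured_prompt)
```

Keeping the sections separate makes it easy to change one of them, for example the output format, and compare the responses.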

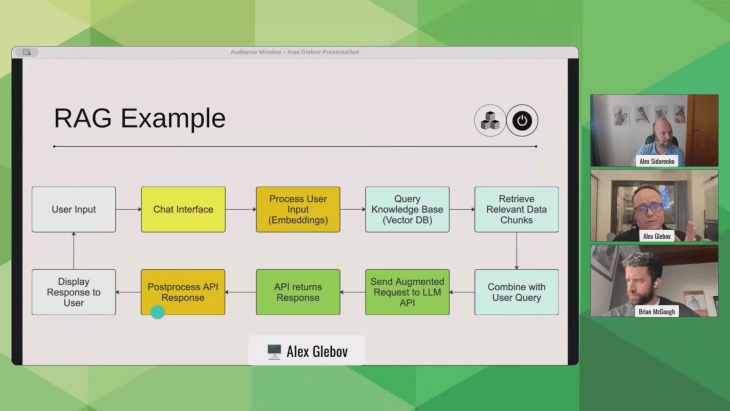

Retrieval-Augmented Generation (RAG)

RAG helps AI models augment responses with external data. Here’s a simplified flow:

- User input is converted into an embedding.

- The system retrieves relevant chunks from a knowledge database.

- These chunks are added to the query sent to the AI.

- The AI integrates this information to generate a response.
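The four steps above can be sketched in a few dozen lines. This is a toy version under stated assumptions: the "embedding" is a simple word count rather than a learned embedding model, the knowledge base is three made-up sentences, and the final call to the AI is left out, so the sketch stops at the augmented prompt. The function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Steps 1 and 2: embed the query and return the most similar chunks."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine_similarity(query_vec, embed(c)), reverse=True)
    return ranked[:top_k]


def build_augmented_prompt(query: str, retrieved: list[str]) -> str:
    """Step 3: add the retrieved chunks to the query."""
    context = "\n".join(f"- {chunk}" for chunk in retrieved)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base chunks.
knowledge_base = [
    "The credit risk policy caps single-counterparty exposure at 10% of capital.",
    "Office plants are watered every Friday by the facilities team.",
    "Counterparty exposure above the cap requires approval from the risk committee.",
]

query = "What is the cap on counterparty exposure?"
top_chunks = retrieve(query, knowledge_base, top_k=2)
prompt = build_augmented_prompt(query, top_chunks)
print(prompt)  # step 4 would send this string to the model
```

Only the two chunks about counterparty exposure reach the prompt. The unrelated chunk stays in the database, which is also why RAG helps with the input token limit.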

Function calling

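LLMs can also trigger predefined functions based on user inputs. The model returns the name of a function and its arguments, and your application runs it, which makes it possible to automate a wide range of tasks.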
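A minimal sketch of the application side of function calling follows. It assumes the model's reply has already been reduced to a function name plus a JSON string of arguments, which is the general shape used by chat APIs that support this feature. The `generate_pdf` body, the `dispatch` helper, and the simulated reply are hypothetical, and no real PDF is written.

```python
import json


def generate_pdf(title: str, sections: list[str]) -> str:
    """Hypothetical stand-in for a PDF generator; returns the name it would write."""
    return f"{title.lower().replace(' ', '_')}.pdf ({len(sections)} sections)"


# Registry of functions the application is willing to run.
AVAILABLE_FUNCTIONS = {"generate_pdf": generate_pdf}


def dispatch(function_call: dict) -> str:
    """Run the function the model asked for, with the arguments it supplied."""
    name = function_call["name"]
    if name not in AVAILABLE_FUNCTIONS:
        raise ValueError(f"Model requested an unknown function: {name}")
    arguments = json.loads(function_call["arguments"])  # arguments arrive as a JSON string
    return AVAILABLE_FUNCTIONS[name](**arguments)


# Simulated model output; a real one would come back from the API.
simulated_call = {
    "name": "generate_pdf",
    "arguments": json.dumps({"title": "Tech Industry Risk Factors", "sections": ["Cyber", "Supply chain"]}),
}

result = dispatch(simulated_call)
print(result)
```

The registry acts as an allow-list: the model can only trigger functions the application has chosen to expose.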

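With the OpenAI Python SDK, a request that offers the model a `generate_pdf` function looks roughly like this:

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

response = client.chat.completions.create(
    model="gpt-4-turbo",
    messages=[{"role": "user", "content": "Generate a PDF report on risk factors for the tech industry."}],
    # Offer one function to the model; the application implements generate_pdf itself.
    tools=[{"type": "function", "function": {"name": "generate_pdf"}}],
)
```

Multi-agent systems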

Multi-agent systems like AutoGen can simulate different roles within an organization. These frameworks make multiple API calls, integrating responses to produce more nuanced outputs.
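The idea can be sketched without any framework. The code below does not use the AutoGen API. It is a plain-Python illustration in which each agent is a set of instructions plus one model call per turn, and every agent sees what was said before it. The role names are illustrative, and `fake_llm` is a hypothetical stub that stands in for a real API call so the sketch runs offline.

```python
from typing import Callable

# An "LLM" here is any function that maps a prompt to a reply.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stub standing in for an API call."""
    first_line = prompt.splitlines()[0]
    return f"[reply to: {first_line}]"


class Agent:
    def __init__(self, name: str, instructions: str, llm: LLM):
        self.name = name
        self.instructions = instructions
        self.llm = llm

    def respond(self, conversation: list[str]) -> str:
        """One model call: the agent's instructions plus the conversation so far."""
        prompt = "\n".join([f"{self.name}: {self.instructions}", *conversation])
        return self.llm(prompt)


def run_round(agents: list[Agent], task: str) -> list[str]:
    """Let each agent speak once, in order, seeing everything said before it."""
    conversation = [f"Task: {task}"]
    for agent in agents:
        reply = agent.respond(conversation)
        conversation.append(f"{agent.name}: {reply}")
    return conversation


team = [
    Agent("Analyst", "Draft a list of risks for the task.", fake_llm),
    Agent("Challenger", "Question weak assumptions in the draft.", fake_llm),
    Agent("Manager", "Summarize the agreed position.", fake_llm),
]

for line in run_round(team, "Assess risks of entering a new market"):
    print(line)
```

Replacing `fake_llm` with a function that calls a real model turns each turn into one API call, which is why multi-agent runs use more tokens than a single prompt.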

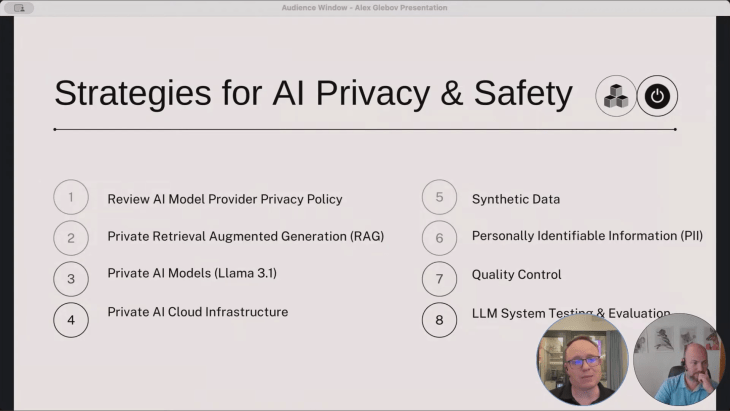

AI privacy and safety strategies

When working with AI, data privacy is crucial:

- Read privacy policies: Ensure you understand the data usage policies of the AI models you integrate.

- Set up private RAG systems: Keep your databases separate and only pull in relevant chunks.

- Use private AI models: Host these models on secure infrastructure accessible only to authorized users.

- Quality control: Continuously test and validate the data.

- Document everything: Maintain transparency to build a risk-aware culture.

When to integrate AI

“Risk management is not about identifying, assessing, and mitigating risks; it’s about changing how the company operates and making decisions with risks in mind.”

It’s either too early or too late when it comes to AI integration, but the key is to start. Each journey begins with a single step, and as AI continues to evolve, having an infrastructure in place will allow you to capitalize on new advancements quickly.

If you’re interested in learning more about integrating AI into your risk management processes, consider becoming a volunteer to test and provide feedback on our ongoing projects. Add a plus sign (+) in the chat, and we’ll reach out to you.

The future of risk management is AI-powered, and we’re just at the beginning of this exciting journey. Enhance your decision-making processes, integrate risk analysis into your business workflows, and stay ahead of the curve.

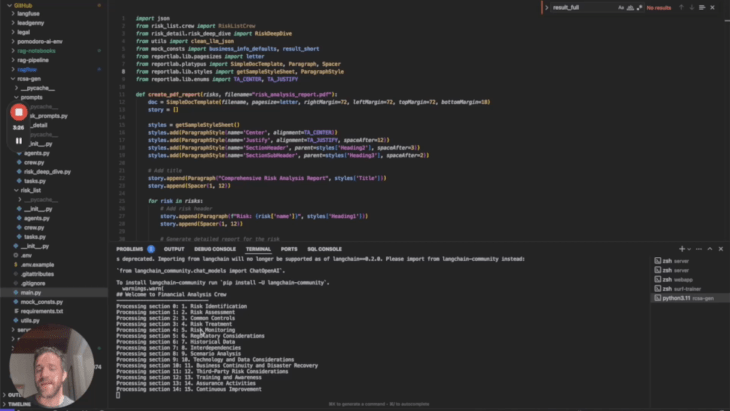

Case study on AI risk assessment

Brian presented an interesting case study on a proof-of-concept risk assessment tool he has been working on. The tool takes basic information about a business as input (name, industry, size, location, existing controls, etc.) and outputs a detailed risk assessment report with a prioritized list of risks and insights into each one.

Some key aspects:

- It breaks the process into three stages: generating the risk list, deep diving into each risk, and compiling the findings into a final report.

- It leverages large language models to extract relevant information from documentation, online sources, calculations, etc.

- It uses different “agents”, such as a manager, an analyst, and a challenger, that simulate roles and conversations.

- It produces the report in a readable PDF format.
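The three stages described above can be pictured as a simple pipeline. The sketch below is not Brian's implementation, which was not shown in detail. All function and class names are hypothetical, the stage functions are stubs where a real tool would make LLM calls, priorities are placeholders taken from list order, and the output is plain text rather than a PDF.

```python
from dataclasses import dataclass, field


@dataclass
class BusinessProfile:
    name: str
    industry: str
    size: str
    location: str
    existing_controls: list[str] = field(default_factory=list)


@dataclass
class RiskFinding:
    title: str
    priority: int  # 1 = highest
    insight: str


def generate_risk_list(profile: BusinessProfile) -> list[str]:
    """Stage 1. Stub: a real tool would ask an LLM for candidate risks."""
    return [f"Regulatory change in {profile.industry}", f"Supplier disruption in {profile.location}"]


def deep_dive(profile: BusinessProfile, risk: str, priority: int) -> RiskFinding:
    """Stage 2. Stub: a real tool would research each risk with further LLM calls."""
    controls = ", ".join(profile.existing_controls) or "none listed"
    return RiskFinding(risk, priority, f"Existing controls considered: {controls}.")


def compile_report(profile: BusinessProfile, findings: list[RiskFinding]) -> str:
    """Stage 3. Build a plain-text report, ordered by priority."""
    lines = [f"Risk assessment: {profile.name}"]
    for finding in sorted(findings, key=lambda item: item.priority):
        lines.append(f"{finding.priority}. {finding.title} - {finding.insight}")
    return "\n".join(lines)


# Hypothetical input.
profile = BusinessProfile("Example Co", "retail", "200 employees", "Canada", ["business continuity plan"])
risks = generate_risk_list(profile)
findings = [deep_dive(profile, risk, priority) for priority, risk in enumerate(risks, start=1)]
report = compile_report(profile, findings)
print(report)
```

Keeping the stages as separate functions means each one can be tested, or swapped for a more capable version, without touching the others.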

This is a great example of how AI can augment risk management workflows. The tool automates labor-intensive manual tasks, draws on both public and private data sources, and formats outputs for easy consumption. As Brian mentioned, it can be customized for different use cases with some additional engineering work. It is exciting to see these concepts applied in practice!

Let me know if you have any other questions on the case study or potential applications in your organization. I’m happy to discuss further.
